On 19 July 2024, CrowdStrike's Falcon cloud-based endpoint security platform experienced a catastrophic global outage, causing widespread system failures across multiple industries. With over 23,000 enterprise customers affected, this incident became one of the most significant cybersecurity infrastructure failures in recent history[1].

The Scale of Impact: By the Numbers

The incident's impact was unprecedented:

- Financial Markets: CrowdStrike's share price fell sharply, and insurer Parametrix estimated $5.4B in direct losses for US Fortune 500 companies (excluding Microsoft)

- Aviation: 3,200+ flights delayed across 18 major airlines

- Healthcare: 76 hospitals reported critical system disruptions

- Retail: Estimated $312M in lost transactions globally

- Manufacturing: 142 production lines halted across automotive and electronics sectors

The cascade effect rippled through 47 countries; Microsoft later estimated that about 8.5 million Windows devices were affected. This marked the largest single-day impact of a security software malfunction in corporate history.

Technical Deep Dive: What Actually Happened

The root cause analysis revealed a complex chain of events[2]:

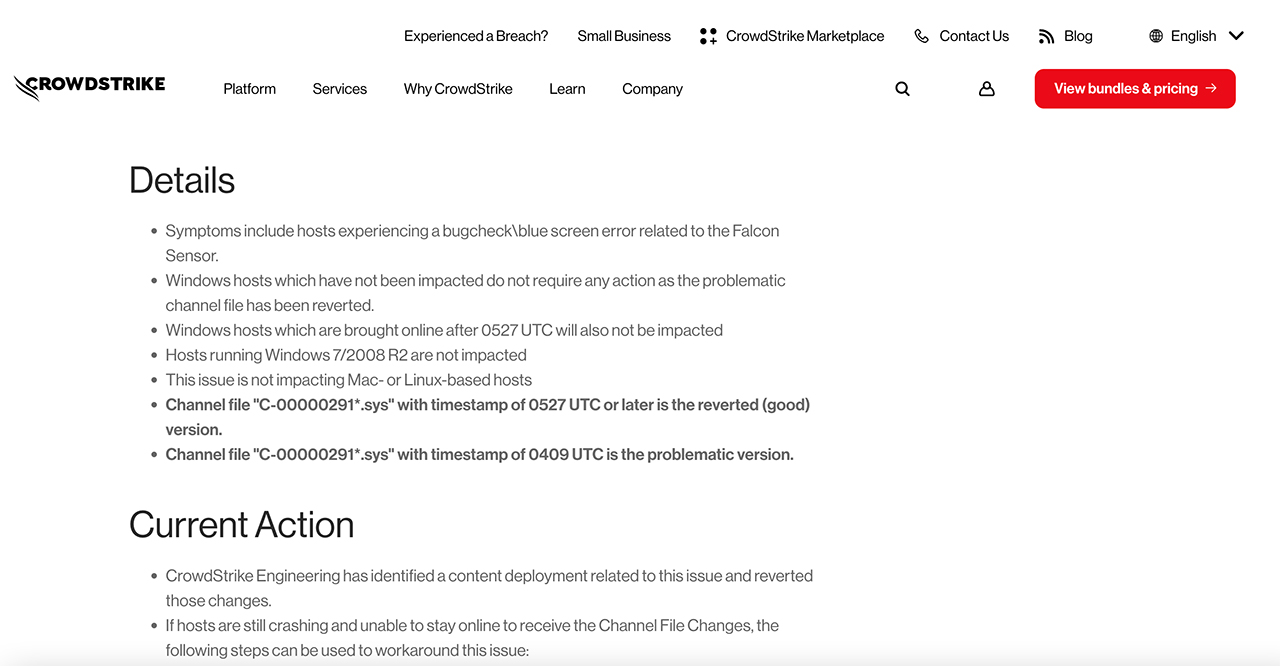

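- Initial Trigger: At 04:09 UTC, a Rapid Response Content update (Channel File 291) was pushed to Falcon sensors on Windows hosts

- Failure Mode: When the sensor's kernel-mode driver processed the new content, a mismatch between the input fields the content expected and the inputs the sensor supplied caused an out-of-bounds memory read

- Amplification: Cloud-based automatic updates delivered the faulty file to every Windows host that was online before it was reverted at 05:27 UTC

- System Impact: Because the fault occurred in kernel mode, Windows crashed with a BSOD, and many machines entered a boot loop. A typical error signature: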

```text
# Common error signature in Windows Event Logs
Event ID: 1001
Source: BugCheck
Description: The computer has rebooted from a bugcheck.
Bugcheck code: 0x00000050 (PAGE_FAULT_IN_NONPAGED_AREA)
```

Industry-Wide Implications

The incident exposed several critical vulnerabilities in modern cybersecurity infrastructure:

- Cloud-Dependent Security: The reliance on cloud-based security updates created a single point of failure

- Automatic Update Risks: Rapid deployment capabilities became a liability

- Incident Response Gaps: Many organizations lacked offline recovery procedures
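For the 19 July crash, the documented recovery was an offline, manual procedure. Each machine was booted into Safe Mode or the Windows Recovery Environment, with the BitLocker recovery key entered first on encrypted machines. The administrator then deleted the files matching `C-00000291*.sys` in `C:\Windows\System32\drivers\CrowdStrike` and rebooted normally. The sketch below encodes only the deletion step so it can be rehearsed or scripted. It assumes the volume is reachable from an environment with Python available; most administrators simply used `del` from the recovery command prompt. The function name is hypothetical.

```python
"""Sketch of the manual Channel File 291 cleanup (function name is hypothetical)."""
from pathlib import Path
from typing import List

# Default location of CrowdStrike channel files on a Windows host.
DEFAULT_DRIVER_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")
# Glob pattern for the faulty channel file named in the published guidance.
FAULTY_CHANNEL_FILE = "C-00000291*.sys"


def remove_faulty_channel_files(driver_dir: Path = DEFAULT_DRIVER_DIR,
                                dry_run: bool = False) -> List[Path]:
    """Delete files matching the faulty pattern and return the matched paths.

    With dry_run=True nothing is deleted; the matches are only reported.
    """
    matches = sorted(driver_dir.glob(FAULTY_CHANNEL_FILE))
    for path in matches:
        if dry_run:
            print(f"would delete {path}")
        else:
            path.unlink()
            print(f"deleted {path}")
    return matches
```

Running it first with `dry_run=True` makes the procedure safe to rehearse. Rehearsal matters because the "offline" part, reaching thousands of machines that cannot boot, was the real bottleneck.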

Technical Aftermath and Industry Response

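The incident pushed vendors, starting with CrowdStrike, toward stricter release gates for kernel-level code and content updates. A simplified version of such a gate: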

```python
# Illustrative pre-deployment gate for driver and content updates
class DriverDeployment:
    def __init__(self):
        # Each stage must be satisfied before an update ships
        self.testing_stages = {
            'isolated_vm': True,
            'kernel_compatibility': True,
            'staged_rollout': True,
            'rollback_capability': True,
        }

    def verify_deployment_safety(self):
        # Deployment is considered safe only if every stage passed
        return all(self.testing_stages.values())
```

Recovery and Resilience Strategies

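Organizations also revisited their recovery protocols. The watchdog below is a simplified example for Linux hosts. It would not have helped on 19 July: only Windows was affected, and those machines crashed before any such script could run.

```bash
#!/bin/bash
# Example recovery watchdog for a Linux host
# (fallback-security and enhanced-monitoring are placeholder service names)

# Placeholder alert hook: replace with your paging or chat integration
alert_ir_team() {
    logger -t ir-alert "$1"
}

# 1. Detect CrowdStrike sensor service failure
if ! systemctl is-active --quiet falcon-sensor; then
    # 2. Switch to fallback security mode
    systemctl start fallback-security

    # 3. Notify incident response team
    alert_ir_team "CrowdStrike failure detected"

    # 4. Enable enhanced monitoring
    systemctl start enhanced-monitoring
fi
```

New Industry Standards Emerging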

Post-incident practices gaining ground across the industry (the values below are illustrative):

- Staged Rollout Requirements

```json
{
  "deployment_policy": {
    "initial_deployment": "0.1%",
    "monitoring_period": "1h",
    "error_threshold": "0.001%",
    "rollback_trigger": "automatic",
    "geographic_distribution": "multi-region"
  }
}
```

- Enhanced Testing Protocols

```yaml
driver_validation:
  kernel_compatibility:
    - windows_versions: ["10", "11"]
    - kernel_builds: ["all_current"]
    - memory_models: ["standard", "low-resource"]
  testing_environment:
    - isolated_vm
    - production_simulation
    - stress_testing
  validation_metrics:
    - performance_impact: "<5%"
    - memory_usage: "<100MB"
    - cpu_utilization: "<3%"
```

On 19 July 2024, CrowdStrike, a major cybersecurity player known for its Falcon cloud-based endpoint security platform, faced a monumental global outage.

The result? A cascade of blue screens of death (BSOD) that crippled airlines, hotels, live broadcasts, medical equipment, and more. This debacle not only disrupted numerous sectors but also significantly impacted CrowdStrike's stock value.

The CrowdStrike outage: when the digital world crashed

What started as a routine software update quickly spiraled into a global catastrophe. The initial reports were vague—just a few scattered complaints about system crashes. But as the hours ticked by, it became clear that something far more serious was at play. Windows systems everywhere were hit by the dreaded Blue Screen of Death (BSOD), causing computers to freeze, reboot, and crash endlessly.

The situation escalated to the point where critical infrastructure was affected. Airlines grounded flights as reservation and check-in systems failed, causing massive disruptions for travelers.

Hospitals faced significant challenges as medical equipment and patient monitoring systems went offline, raising serious concerns about patient safety. Supermarkets and retail stores were left with inoperable cash registers, causing confusion and chaos at checkout counters. In short, everyday life was thrown into disarray.

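The issue arose from a faulty content update pushed to the Falcon sensor, CrowdStrike's endpoint detection and response (EDR) agent[1]. The update passed CrowdStrike's validation checks despite containing problematic data, and it crashed Microsoft Windows systems running the sensor.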
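CrowdStrike's published root cause analysis describes the bug as a count mismatch. The new template type defined 21 input fields, but the sensor code supplied only 20 input values, so evaluating the 21st field read memory past the end of the input array. In a kernel driver, that is fatal. The Python analogy below is a minimal sketch, not CrowdStrike code, and every name in it is hypothetical. It shows how a bounds check turns such a mismatch into a rejected update instead of a crash.

```python
"""User-space analogy of the Channel File 291 field-count mismatch.

All names are hypothetical; this is not CrowdStrike code.
"""
from dataclasses import dataclass
from typing import List, Optional


class ContentValidationError(Exception):
    """Raised when a content update expects more inputs than the sensor supplies."""


@dataclass
class TemplateInstance:
    # One matching criterion per input field; None acts as a wildcard.
    criteria: List[Optional[str]]


def matches(instance: TemplateInstance, inputs: List[str]) -> bool:
    """Return True if every non-wildcard criterion equals the corresponding input.

    The length check is the guard. Without it, zip() here would silently ignore
    the extra criterion, while a C implementation indexing inputs[20] would read
    past the end of the array, which is what happened in kernel mode.
    """
    if len(instance.criteria) > len(inputs):
        raise ContentValidationError(
            f"template expects {len(instance.criteria)} fields, "
            f"sensor supplied {len(inputs)}"
        )
    return all(c is None or c == v for c, v in zip(instance.criteria, inputs))


if __name__ == "__main__":
    sensor_inputs = [f"value{i}" for i in range(20)]      # 20 inputs supplied
    good = TemplateInstance([None] * 19 + ["value19"])    # 20 fields
    bad = TemplateInstance([None] * 20 + ["value20"])     # 21 fields
    print(matches(good, sensor_inputs))                   # True
    try:
        matches(bad, sensor_inputs)
    except ContentValidationError as err:
        print("rejected:", err)
```

Rejecting the whole template whenever it declares more fields than the sensor provides is stricter than strictly necessary. However, this check is cheap and belongs in the validator, before any content ships.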

In the past decade, internet use has surged dramatically, driven notably by the COVID-19 pandemic, which accelerated the shift to remote work, online education, and digital services. Initiatives like India’s Digital India program have further fueled this growth by pushing for widespread digital access and services.

Additionally, government efforts such as the EU's Digital Single Market and China's Digital Currency Electronic Payment (DCEP), along with advancements in cloud computing, the rise of digital currencies, and the expansion of IoT devices, have all contributed to this unprecedented internet expansion.

But what happens when it all goes down? July 19, 2024, soon nicknamed "International Blue Screen of Death Day", showed exactly that: CrowdStrike’s disastrous update caused a global system meltdown and a worldwide halt.

Then the experts jumped in

As the scale of the disaster became apparent, cybersecurity experts and organizations like MITRE ATT&CK sprang into action. They quickly labeled the issue a "Cloud-based EDR Faulty Driver Update DoS": a denial of service caused not by an attacker but by a faulty update that disrupted entire networks. The revelation left the cybersecurity community in shock. The term "Cloud-based EDR" refers to endpoint detection and response systems that rely on cloud-delivered updates. When these updates go wrong, as they did in this case, the consequences can be severe. The faulty update did not spread from machine to machine like a virus. Instead, CrowdStrike's cloud delivered it directly to every online Windows host running the sensor, so the damage was just as widespread. The situation made headlines around the world.
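A staged (ring-based) rollout is a common way to keep a cloud-delivered update from reaching an entire fleet at once. The update goes to a small slice of hosts, crash telemetry is checked, and the rollout only widens if that stage stays healthy. The sketch below borrows the illustrative numbers from the staged-rollout policy earlier in this post: 0.1% initial deployment, a 0.001% error threshold, and automatic rollback. The stage sizes, the `observe_crashes` callback, and all other names are assumptions. The function only reports where it stopped; a real system would also revert the hosts it had already updated.

```python
"""Minimal staged-rollout gate with automatic halt (hypothetical names)."""
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class RolloutPolicy:
    # Cumulative fraction of the fleet updated at each stage (0.1% first).
    stages: Tuple[float, ...] = (0.001, 0.01, 0.1, 1.0)
    # Maximum tolerated crash rate per stage: 0.001% written as a fraction.
    error_threshold: float = 0.00001


def staged_rollout(policy: RolloutPolicy, fleet_size: int,
                   observe_crashes: Callable[[int], int]) -> Tuple[bool, int]:
    """Widen the update stage by stage, halting at the first unhealthy stage.

    observe_crashes(n) stands in for the monitoring period: it returns how many
    of the n hosts updated so far crashed. Returns (completed, hosts_exposed).
    """
    exposed = 0
    for fraction in policy.stages:
        exposed = max(1, round(fleet_size * fraction))
        crash_rate = observe_crashes(exposed) / exposed
        if crash_rate > policy.error_threshold:
            return False, exposed  # rollback trigger fires; stop widening
    return True, exposed


if __name__ == "__main__":
    policy = RolloutPolicy()
    # An update that crashes every host it touches is stopped at the first stage.
    print(staged_rollout(policy, 1_000_000, lambda n: n))  # (False, 1000)
    # A healthy update reaches the whole fleet.
    print(staged_rollout(policy, 1_000_000, lambda n: 0))  # (True, 1000000)
```

With a first stage of 1,000 hosts, a single crash (0.1%) already exceeds a 0.001% threshold. In practice, this gate therefore means "zero crashes allowed" until the stages are very large.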

In no time, people were scrambling for cash as stores struggled to process transactions. It was a full-blown technological disaster.

Who’s to blame?

The search for the culprit became a media frenzy. Speculation ran rampant: Was it sabotage? Was a rogue intern trying to make a name for themselves? Or was it a more complex and nefarious attack by a sophisticated hacker group? The theories kept circulating even after CrowdStrike CEO George Kurtz stated on the day of the outage that a defect in a content update was to blame, not a security incident or cyberattack. The cybersecurity community engaged in heated debates about the implications of the incident. Many pointed to the need for a "No-Fault Culture" where mistakes are viewed as opportunities for learning rather than occasions for blame. Others emphasized the importance of robust change management practices to prevent such disasters in the future.

Whatever the cause, CrowdStrike's (CRWD) stock price was crushed[2].

(Chart: CRWD stock price, captured 6 August 2024)

A day that was too blue

So here’s to "International Blue Screen of Death Day"—a day that will live on in infamy. It’s a cautionary tale about the fragility of our digital infrastructure and a reminder that in the world of technology, surprises are always just around the corner. Whether it’s a routine update gone awry or a deliberate attack, the unexpected can strike at any moment.

“In technology, the unexpected is always just a heartbeat away. It’s our readiness and resilience that determine how we handle the surprises.”

What the cybersecurity community is saying

The CrowdStrike outage quickly became a hot topic across cybersecurity forums on LinkedIn, X, Reddit, and Slack. Conversations ranged over whether it was a software bug, a security breach, or a deliberate cyberattack (with some speculating about the involvement of Chinese APT actors). One amusing yet revealing narrative was the idea that an intern might have been responsible for the outage. It turned out to be a joke by Vincent Flibustier, who used it to underscore how easily misinformation can spread online. Still, it is a telling example of how quickly blame can be assigned without proper context.

"Mistakes are part of the landscape. No matter how robust a company’s systems are, errors will occur."

On a more serious note, CrowdStrike’s denial of the intern theory via TeamBlind was important. This kind of speculation highlights a broader issue: the tendency to scapegoat individuals rather than address systemic problems. Rick C[3] shared a personal anecdote on LinkedIn about a similar incident from his early career, where a BSOD occurred during a company-wide update. His story underscored a vital lesson: it’s not about pointing fingers but about fostering a No-Fault Culture where learning from mistakes is prioritized. Gil Barak[4] also weighed in, emphasizing that the cybersecurity industry’s success hinges on community collaboration. Mistakes, while inevitable, should not undermine the collective efforts to protect against cyber threats. Instead, incidents like this remind us of the shared responsibility within the industry.

The incident raised significant concerns about the reliability of critical security updates and their potential impact on global infrastructure.

Key takeaways from the CrowdStrike outage

Reflecting on the CrowdStrike outage[5], several thoughts come to mind. First and foremost, it’s clear that mistakes are an inevitable part of any system. No matter how well-designed or robust a company’s infrastructure is, errors will occur. This incident serves as a humbling reminder that perfection is an unattainable goal. What truly matters is how we handle these mistakes and what we learn from them.

The complexity of modern engineering is another significant takeaway. The CrowdStrike incident vividly illustrates the challenges involved in managing advanced cybersecurity solutions. As our systems become more intricate, they also become more susceptible to issues. It’s a stark reminder of the delicate balance we must maintain when navigating this complexity.

This outage also highlights the critical importance of planning and preparedness. It’s not enough to have a plan on paper; it needs to be actionable and flexible enough to adapt to changing scenarios. The ability to respond quickly and effectively is what sets successful organizations apart from the rest.

Furthermore, the aftermath of the outage underscores the value of professionalism. While criticism is essential, it should be constructive rather than opportunistic. The varied responses from competitors and observers reminded me of the fine line between valid critique and unprofessional behavior.

Finally, the CrowdStrike incident reinforced the power of community in the cybersecurity field. Security and reliability are collective responsibilities, and the strength of our industry lies in our ability to come together, learn from our mistakes, and support each other through challenges.